Overview: Hadoop Streaming is one of the most important utilities in the Hadoop distribution. The Streaming interface lets you write a Map-Reduce program in any language that can read from STDIN and write to STDOUT, so Streaming can be described as a generic Hadoop API for writing Map-Reduce programs in virtually any language. In this approach the Mapper and Reducer receive input on STDIN and emit output on STDOUT. Although Hadoop is written in Java, and Java is the most common language for Map-Reduce jobs, the Streaming API gives you the flexibility to write a job in the language of your choice.

In this article I will discuss the Hadoop Streaming API.

Introduction: Hadoop Streaming is a generic API which allows Mappers and Reducers to be written in any language. The basic concept remains the same: Mappers and Reducers read their input from stdin and write their output to stdout as (key, value) pairs. Apache Hadoop uses standard UNIX streams as the interface between your application and the Hadoop system. Streaming is best suited to text processing. The data view is line oriented, and each line is treated as a key/value pair separated by a tab character. The program reads each line and processes it as required.

Working with Hadoop Streams: In any Map-Reduce job, input and output are key/value pairs. The same is true for the Streaming API, where input and output are always represented as text. The (key, value) input pairs are written to stdin for both the Mapper and the Reducer, with a tab character separating key and value. The Streaming program uses the tab character to split each line into a key/value pair. Output follows the same procedure: the Streaming program writes its output to stdout in the format shown below, where \t stands for the tab character and \n for the newline.

key1 \t value1 \n

key2 \t value2 \n

key3 \t value3 \n

In this format each line contains exactly one key/value pair. The input to the reducer is sorted, so that all records with the same key are adjacent to one another.
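The line format and the sorted reducer input described above can be sketched as a small word count. This is a minimal illustration, not code from Hadoop itself: the function names `map_lines` and `reduce_lines` are hypothetical, and the call to `sorted` only stands in for the sort that Hadoop performs between the map and reduce phases.

```python
import itertools


def map_lines(lines):
    """Mapper logic: emit one 'word<TAB>1' record per word."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def reduce_lines(sorted_lines):
    """Reducer logic: relies on equal keys being adjacent in the input."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for key, group in itertools.groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(value) for _, value in group)
        yield f"{key}\t{total}"


if __name__ == "__main__":
    text = ["to be or not", "to be"]
    # sorted() is an assumption standing in for Hadoop's shuffle and sort.
    mapped = sorted(map_lines(text))
    for record in reduce_lines(mapped):
        print(record)
```

In a real Streaming job the two functions would live in separate scripts, each reading lines from stdin and printing its records to stdout.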

Any program or tool can be used as a Mapper or Reducer if it can handle input in the text format described above. Scripts written in Perl, Python or Bash can also be used for this purpose, provided every node has the interpreter for that language installed.

Execution steps: The Hadoop Streaming utility allows any script or executable to work as a Mapper or Reducer, provided it can read from stdin and write to stdout. In this section I will describe the steps involved in running the Streaming utility, using two sample programs as the Mapper and Reducer.

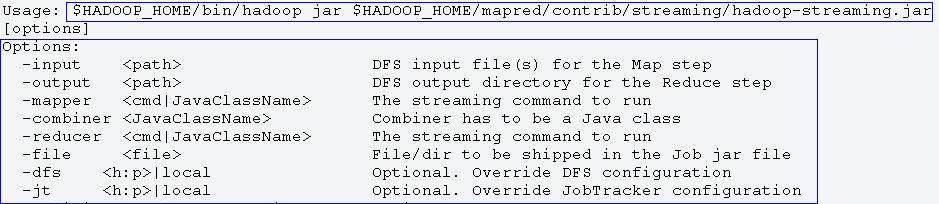

- First, let us look at the command used to run a Streaming job. Here the command is run without any arguments, so it prints the available usage options.

Image 1: Showing Streaming command and usage

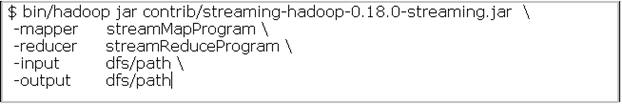

- Now let us assume that streamMapProgram and streamReduceProgram will work as the Mapper and Reducer. These programs can be scripts, executables or any other component capable of taking input from stdin and producing output on stdout. The following command shows how the Mapper and Reducer arguments are combined with the Streaming command.

Image 2: Showing input and output arguments

It is assumed that the Mapper and Reducer programs are present on all the nodes before the Streaming job starts.

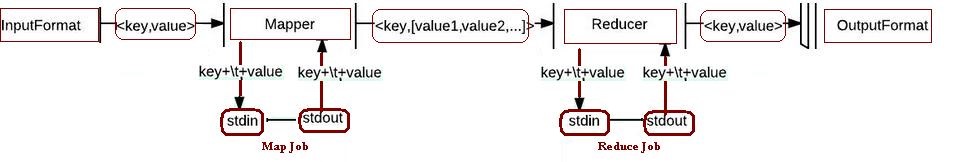

- First, the Mapper task converts its input into lines and feeds them to the stdin of the process. The Mapper then collects the line-oriented output of the process from stdout and converts each line into a key/value pair. These key/value pairs are the actual output of the Mapper task. The key is the part of the line up to the first tab character, and the remainder of the line is the value. If the line contains no tab character, the whole line is taken as the key and the value is null.

- The same process is followed when the Reducer task runs. First it converts its input key/value pairs into lines and feeds them to the stdin of the process. Then the Reducer collects the line-oriented output from the stdout of the process and converts each line into a key/value pair, which becomes the final output of the reduce job. Key and value are separated in the same way, at the first tab character.
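The splitting rule used by both tasks can be sketched in a few lines. This is only an illustration of the rule as described here, not Hadoop's own code; the name `split_key_value` is hypothetical, and Python's `None` stands in for the null value.

```python
def split_key_value(line):
    """Split one output line at the first tab into (key, value)."""
    line = line.rstrip("\n")
    key, tab, value = line.partition("\t")
    if not tab:
        # No tab at all: the whole line is the key and the value is null.
        return line, None
    # Only the first tab separates key from value; later tabs stay in the value.
    return key, value


if __name__ == "__main__":
    print(split_key_value("user42\tlogin\t2024\n"))
    print(split_key_value("no tab on this line\n"))
```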

The following diagram shows the process flow in a Streaming job:

Image 3: Streaming process flow

Design Difference between Java API and Streaming: There is a design difference between the Java MapReduce API and Hadoop Streaming, mainly in how input is processed. In the standard Java API, records are processed one at a time: the framework calls the map() method on your Mapper once for each record. With the Streaming API, the map program itself controls how the input is read, so it can read and process several lines at a time. The same effect can be achieved in Java, but it needs an extra mechanism, such as instance variables that accumulate multiple lines before they are processed together.
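To make the difference concrete, here is a minimal sketch of a Streaming-style mapper that consumes its input in batches of lines rather than one record per call. The helper names `read_batches` and `map_batch`, the batch size, and the per-batch output are all hypothetical choices for illustration.

```python
import io
from itertools import islice


def read_batches(stream, batch_size):
    """Yield lists of up to batch_size lines; the program decides how to read."""
    lines = iter(stream)
    while True:
        batch = list(islice(lines, batch_size))
        if not batch:
            return
        yield batch


def map_batch(batch):
    """Hypothetical per-batch work: emit one record with the batch's line count."""
    return f"lines\t{len(batch)}"


if __name__ == "__main__":
    # A real mapper would pass sys.stdin here; StringIO keeps the demo self-contained.
    sample = io.StringIO("a\nb\nc\n")
    for batch in read_batches(sample, batch_size=2):
        print(map_batch(batch))
```

A reducer could then sum the `lines` records to obtain the total line count.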

Streaming Commands: The Hadoop Streaming API supports both streaming and generic command options. Some important streaming command options are listed below.

| No. | Parameter | Required/Optional | Description |
| --- | --- | --- | --- |
| 1 | -input directoryname or filename | Required | Input location for the Mapper. |
| 2 | -output directoryname | Required | Output location for the Reducer. |
| 3 | -mapper executable or JavaClassName | Required | Mapper executable. |
| 4 | -reducer executable or JavaClassName | Required | Reducer executable. |
| 5 | -file filename | Optional | Makes the mapper, reducer, or combiner executable available locally on the compute nodes. |
| 6 | -inputformat JavaClassName | Optional | The supplied class, which should return key/value pairs of the Text class. TextInputFormat is the default. |
| 7 | -outputformat JavaClassName | Optional | The supplied class, which should take key/value pairs of the Text class. TextOutputFormat is the default. |
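As a rough sketch, the options in the table can be assembled into a command line like this. The helper `build_streaming_command`, the jar file name and the input and output paths are hypothetical placeholders; the real location of the streaming jar depends on the installation. Generic options such as -D are placed before the streaming options, which Hadoop requires.

```python
import shlex


def build_streaming_command(streaming_jar, input_path, output_path,
                            mapper, reducer, files=(), properties=None):
    """Return the argument list for a Streaming job (generic options first)."""
    cmd = ["hadoop", "jar", streaming_jar]
    # Generic options (-D property=value) must precede streaming options.
    for name, value in (properties or {}).items():
        cmd += ["-D", f"{name}={value}"]
    for path in files:
        cmd += ["-file", path]
    cmd += ["-input", input_path, "-output", output_path,
            "-mapper", mapper, "-reducer", reducer]
    return cmd


if __name__ == "__main__":
    cmd = build_streaming_command(
        "hadoop-streaming.jar",        # placeholder jar path (assumption)
        "inputDir", "outputDir",       # placeholder locations (assumption)
        "streamMapProgram", "streamReduceProgram",
        properties={"mapred.reduce.tasks": 4},
    )
    print(shlex.join(cmd))
```

The resulting list could be passed to `subprocess.run` on a machine where the `hadoop` command is available.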

Additional configuration variables: In a Streaming job, additional configuration variables can be specified with the -D option ("-D <property>=<value>").

- The following option changes the local temp directory

-D dfs.data.dir=/tmp

- The following option specifies additional local temp directories

-D mapred.local.dir=/tmp/local/streamingjob

- The following option specifies a map-only job

-D mapred.reduce.tasks=0

- The following option specifies the number of reducers

-D mapred.reduce.tasks=4

- The following options specify how each map output line is split into key and value

-D stream.map.output.field.separator=. \

-D stream.num.map.output.key.fields=6\
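The effect of the two split options can be illustrated with a short sketch. It mimics the rule as I understand it from the Hadoop Streaming documentation (the key is the prefix up to the Nth separator; with fewer separators the whole line is the key and the value is empty); it is not Hadoop's implementation, and the name `split_map_output` is hypothetical.

```python
def split_map_output(line, separator="\t", num_key_fields=1):
    """Split a map output line into (key, value) at the Nth separator."""
    parts = line.split(separator)
    if len(parts) <= num_key_fields:
        # Fewer than N separators: whole line is the key, value is empty.
        return line, ""
    key = separator.join(parts[:num_key_fields])
    value = separator.join(parts[num_key_fields:])
    return key, value


if __name__ == "__main__":
    # Mirrors separator "." and 6 key fields from the options above.
    print(split_map_output("a.b.c.d.e.f.g.h", separator=".", num_key_fields=6))
    print(split_map_output("a.b.c", separator=".", num_key_fields=6))
```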

Conclusion: The Apache Hadoop framework and MapReduce programming model are widely used for processing large volumes of data. The MapReduce framework does the actual processing and carries the application logic. The Java MapReduce API is the standard option for writing a MapReduce program, but the Hadoop Streaming API makes it possible to write MapReduce jobs in other languages. This flexibility is especially valuable to programmers whose experience lies in languages other than Java. Even plain executables can be used with the Streaming API to work as a MapReduce job. The only condition is that the program or executable must be able to take input from STDIN and produce output on STDOUT.