YARN

Overview: Since Hadoop 0.23, MapReduce has changed significantly. The new version is known as MapReduce 2.0 (MRv2) or YARN. MapReduce 2.0 splits the two major functions of the Job Tracker, resource management and job scheduling/monitoring, into separate daemons.

In this document, I will discuss YARN/MapReduce 2.0 and the functionality it provides in detail.

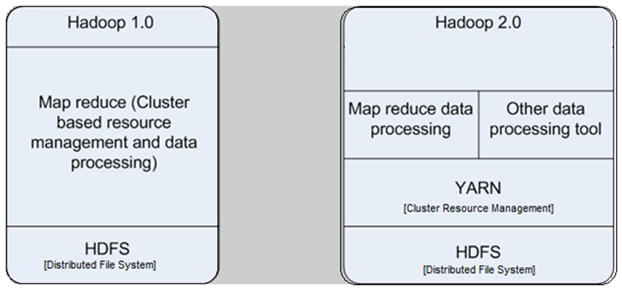

Introduction: YARN stands for “Yet Another Resource Negotiator”. YARN/MapReduce2 was introduced in Hadoop 2.0. YARN is a layer that separates resource management from the processing components. The motivation was to support a broader range of interaction models for data stored in HDFS, beyond the MapReduce layer. Figure 1 compares the architecture of Hadoop 1.0 and Hadoop 2.0/YARN.

Figure 1: Hadoop 1.0 and 2.0 architecture

YARN takes care of the resource management tasks that MapReduce performed in the earlier version. This lets the MapReduce engine focus on its own task, which is processing data. With the YARN layer, multiple applications can run on Hadoop while sharing a common resource management layer.

Features of YARN:

YARN enhances the power of cluster computing with Hadoop through the following features:

- Scalability: Because the Resource Manager focuses on scheduling, YARN can manage very large clusters more efficiently, and the cluster's capacity to process data grows accordingly.

- Compatibility with existing MapReduce-based applications: YARN can run existing MapReduce applications without any change to the applications or to the way they are run.

- Better Cluster Utilization: The YARN Resource Manager optimizes cluster utilization according to criteria such as capacity guarantees, fairness, and Service Level Agreements.

- Support for additional workloads apart from MapReduce: Newer programming models, such as graph processing and iterative modeling, are now part of data processing. These models integrate easily with YARN, which helps organizations gain insight from real-time data and market trends.

- Agility: Because resource management is separated from the processing frameworks, the resource management layer can be operated and evolved more flexibly.
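The fairness criterion mentioned under Better Cluster Utilization can be illustrated with max-min fair sharing, a simplified form of the idea behind YARN's Fair Scheduler. The Python sketch below is not the scheduler's actual code: it assumes memory is the only resource and uses hypothetical queue names. It splits cluster capacity so that no queue receives more than it asks for, and whatever lightly loaded queues leave unused is shared evenly among the rest.

```python
from typing import Dict


def max_min_fair_share(capacity_mb: float, demands_mb: Dict[str, float]) -> Dict[str, float]:
    """Split capacity among queues with max-min fairness (water-filling).

    Queues asking for less than an equal share receive their full demand;
    whatever they leave unused is divided evenly among the remaining queues.
    """
    shares = {queue: 0.0 for queue in demands_mb}
    unsatisfied = dict(demands_mb)  # queues still waiting for their share
    left = capacity_mb
    while unsatisfied and left > 0:
        equal_share = left / len(unsatisfied)
        fits = {q: d for q, d in unsatisfied.items() if d <= equal_share}
        if not fits:
            # Every remaining queue wants more than an equal share: split evenly.
            for q in unsatisfied:
                shares[q] = equal_share
            break
        for q, demand in fits.items():
            shares[q] = demand
            left -= demand
            del unsatisfied[q]
    return shares


# Example: 100,000 MB of memory shared by three hypothetical queues.
print(max_min_fair_share(100_000, {"etl": 20_000, "adhoc": 50_000, "ml": 60_000}))
# {'etl': 20000, 'adhoc': 40000.0, 'ml': 40000.0}
```

The lightly loaded `etl` queue keeps its full demand, and the 80,000 MB it leaves is divided evenly between the two heavier queues.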

Components of the YARN framework:

YARN is based on the concept of ‘Divide and Rule’. It splits the major responsibilities of the Job Tracker and Task Tracker into the following separate entities:

- Global Resource Manager

- Application Master per application

- Node Manager per slave node

- Containers for each application, running on the Node Managers
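To make this division of responsibilities concrete, here is a minimal Python data model of these entities. It is an illustrative sketch, not Hadoop's Java API. The class names, the `grant` method and the memory/vcore figures are hypothetical, and allocating and launching a container are collapsed into one step. The model shows that every container belongs to exactly one application and runs on exactly one Node Manager, and that the Application Master itself occupies a container.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Container:
    """A slice of one node's resources, granted to exactly one application."""
    container_id: str
    app_id: str
    node_id: str
    memory_mb: int
    vcores: int


@dataclass
class NodeManager:
    """One per worker node: hosts containers and tracks what they use."""
    node_id: str
    memory_mb: int
    vcores: int
    containers: List[Container] = field(default_factory=list)

    def free_resources(self) -> Tuple[int, int]:
        used_mb = sum(c.memory_mb for c in self.containers)
        used_vcores = sum(c.vcores for c in self.containers)
        return self.memory_mb - used_mb, self.vcores - used_vcores


@dataclass
class ResourceManager:
    """One per cluster: knows every Node Manager and every container it has granted."""
    nodes: Dict[str, NodeManager] = field(default_factory=dict)
    next_id: int = 0

    def grant(self, app_id: str, node_id: str, memory_mb: int, vcores: int) -> Container:
        # Allocation and launch are collapsed into one step in this sketch.
        node = self.nodes[node_id]
        free_mb, free_vcores = node.free_resources()
        if memory_mb > free_mb or vcores > free_vcores:
            raise ValueError(f"{node_id} cannot fit {memory_mb} MB / {vcores} vcores")
        self.next_id += 1
        container = Container(f"container_{self.next_id:02d}", app_id, node_id, memory_mb, vcores)
        node.containers.append(container)
        return container

    def containers_of(self, app_id: str) -> List[Container]:
        return [c for n in self.nodes.values() for c in n.containers if c.app_id == app_id]


rm = ResourceManager()
rm.nodes["node1"] = NodeManager("node1", memory_mb=8192, vcores=8)
rm.grant("app_1", "node1", memory_mb=1024, vcores=1)  # the Application Master's own container
rm.grant("app_1", "node1", memory_mb=2048, vcores=1)  # a task container requested by that AM
print(rm.nodes["node1"].free_resources())              # (5120, 6)
print([c.container_id for c in rm.containers_of("app_1")])  # ['container_01', 'container_02']
```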

How does YARN work?

The Resource Manager and the Node Manager together form a new, generic system for managing applications in a distributed manner. The Resource Manager is the ultimate authority that arbitrates resources among all the applications in the system. The per-application Application Master is a framework-specific entity. It negotiates resources with the Resource Manager and works with the Node Managers to execute and monitor the application's component tasks.

Resource Manager: The Resource Manager has a built-in scheduler, which allocates resources to the running applications subject to user-defined constraints such as queue capacities and user limits. The scheduler bases its decisions on the resource requirements of the applications. The Node Manager is the per-machine slave. It launches the application's containers, monitors their resource usage (CPU, memory, disk, network) and reports it to the Resource Manager. Each Application Master is responsible for negotiating appropriate resource containers from the scheduler, tracking their status and monitoring their progress. From the system's point of view, the Application Master itself runs in a container and controls the entire application.
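The queue capacities and user limits enforced by the scheduler can be sketched as an admission check. The Python code below is a simplified, hypothetical model in the spirit of the Capacity Scheduler, not its real logic. It assumes memory is the only resource and uses a fixed per-user cap expressed as a percentage of the queue. It also ignores features such as queues elastically borrowing idle capacity from other queues.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Queue:
    name: str
    capacity_pct: int     # share of cluster memory guaranteed to this queue
    user_limit_pct: int   # max share of the queue's capacity a single user may hold
    used_mb: Dict[str, int] = field(default_factory=dict)  # memory in use, per user


def try_allocate(queue: Queue, cluster_mb: int, user: str, request_mb: int) -> bool:
    """Grant the request only if it respects both the queue capacity and the user limit."""
    queue_limit = cluster_mb * queue.capacity_pct // 100
    user_limit = queue_limit * queue.user_limit_pct // 100
    queue_used = sum(queue.used_mb.values())
    user_used = queue.used_mb.get(user, 0)
    if queue_used + request_mb > queue_limit or user_used + request_mb > user_limit:
        return False  # in this model the request simply waits until resources are released
    queue.used_mb[user] = user_used + request_mb
    return True


cluster_mb = 100_000
analytics = Queue("analytics", capacity_pct=40, user_limit_pct=50)  # 40,000 MB queue, 20,000 MB per user
print(try_allocate(analytics, cluster_mb, "alice", 16_000))  # True
print(try_allocate(analytics, cluster_mb, "alice", 8_000))   # False: alice would exceed 20,000 MB
print(try_allocate(analytics, cluster_mb, "bob", 16_000))    # True
print(try_allocate(analytics, cluster_mb, "carol", 10_000))  # False: the queue would exceed 40,000 MB
```

Integer arithmetic is used for the limits so the comparisons are exact.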

The Resource Manager sits at the root of the YARN hierarchy. It governs the entire cluster and controls how resources are assigned to applications. The Resource Manager manages the division of resources (e.g. compute, memory, bandwidth) across all the Node Managers below it. It also allocates resources to the Application Masters and keeps track of the applications running on the Node Managers. Thus the Resource Manager and the per-application Application Masters together take over the role of the Job Tracker, while the Node Manager takes over the role of the Task Tracker.
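The Resource Manager's bookkeeping can be sketched as follows. In this minimal Python model, the names are hypothetical and memory is the only resource. Each Node Manager heartbeat reports how much memory is free on that node. The Resource Manager then uses that report to place pending container requests on the node, first come, first served. Real YARN schedulers typically also make placement decisions when a node heartbeats, but they apply queue, locality and fairness rules that this sketch omits.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class ResourceManagerSketch:
    """Tracks free memory per node and places pending requests on node heartbeats."""

    def __init__(self) -> None:
        self.free_mb: Dict[str, int] = {}               # node_id -> free memory from its last report
        self.pending: Deque[Tuple[str, int]] = deque()  # (app_id, memory_mb) asks, in arrival order

    def request(self, app_id: str, memory_mb: int) -> None:
        self.pending.append((app_id, memory_mb))

    def node_heartbeat(self, node_id: str, free_mb: int) -> List[Tuple[str, int]]:
        """Record a Node Manager's report, then assign requests that fit on that node."""
        self.free_mb[node_id] = free_mb
        assigned = []
        # Strict FIFO: stop at the first request that does not fit on this node.
        while self.pending and self.pending[0][1] <= self.free_mb[node_id]:
            app_id, memory_mb = self.pending.popleft()
            self.free_mb[node_id] -= memory_mb
            assigned.append((app_id, memory_mb))
        return assigned

    def cluster_free_mb(self) -> int:
        return sum(self.free_mb.values())


rm = ResourceManagerSketch()
rm.request("app_1", 4096)
rm.request("app_1", 4096)
rm.request("app_2", 2048)
print(rm.node_heartbeat("node1", 6144))  # [('app_1', 4096)]
print(rm.node_heartbeat("node2", 8192))  # [('app_1', 4096), ('app_2', 2048)]
print(rm.cluster_free_mb())              # 4096
```

The strict FIFO rule keeps the example short. It also shows why real schedulers need smarter policies: node1 is left with 2,048 MB that the later `app_2` request could have used.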

Application Master: Each application running on YARN has its own Application Master, which manages that application instance. The Application Master negotiates resources from the Resource Manager. Working with the Node Managers, it also monitors the execution and resource consumption (e.g. CPU and memory) of its containers.
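The Application Master's negotiation is incremental. It asks for containers, receives whatever the Resource Manager can grant on each heartbeat, and keeps asking for the remainder. The Python sketch below simulates that loop under stated assumptions. The Resource Manager is replaced by a hypothetical list of how many containers it can hand out per heartbeat, and every container runs one task. In Hadoop's Java client library, the corresponding calls live on `AMRMClient` (for example `addContainerRequest` and `allocate`), but this sketch does not model that API.

```python
from typing import Iterable, List


def negotiate(tasks: int, grantable_per_heartbeat: Iterable[int]) -> List[int]:
    """Simulate an Application Master's heartbeat loop.

    The i-th value of `grantable_per_heartbeat` stands in for the Resource Manager:
    it is how many containers the RM could hand out on the i-th heartbeat.
    Returns the number of containers actually granted on each heartbeat.
    """
    outstanding = tasks  # containers the AM is still asking for
    granted_per_heartbeat = []
    for grantable in grantable_per_heartbeat:
        if outstanding == 0:
            break
        granted = min(outstanding, grantable)  # in this model the RM never grants more than was asked
        outstanding -= granted                 # the next ask covers only what is still missing
        granted_per_heartbeat.append(granted)
    if outstanding:
        raise RuntimeError(f"{outstanding} task(s) still waiting for containers")
    return granted_per_heartbeat


print(negotiate(10, [3, 0, 4, 5, 5]))  # [3, 0, 4, 3]
```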

Node Manager: The Node Manager is responsible for managing an individual node within the YARN cluster and provides per-node services. These range from managing containers and their life cycle to monitoring resources and tracking the health and resource usage of the node. Unlike MapReduce 1.0, which managed the execution of map and reduce tasks through fixed slots, the Node Manager manages abstract containers. Each container represents a share of the node's resources allocated to a particular application. YARN continues to use the HDFS layer, with the master Name Node providing metadata services and the Data Nodes providing replicated storage across the cluster.
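The difference between MapReduce 1.0 slots and YARN containers can be shown with a small calculation. The Python sketch below assumes a hypothetical node configured in one of two ways: with 4 map slots and 2 reduce slots (MRv1 style), or with 12,288 MB of memory handed out as containers (YARN style). It counts how many pending tasks could run at once and ignores CPU and other resources.

```python
from typing import List


def running_with_slots(map_slots: int, reduce_slots: int,
                       pending_maps: int, pending_reduces: int) -> int:
    """MRv1 style: slots are typed, so an idle reduce slot cannot run a map task."""
    return min(map_slots, pending_maps) + min(reduce_slots, pending_reduces)


def running_with_containers(node_memory_mb: int, task_sizes_mb: List[int]) -> int:
    """YARN style: any task may start while the node still has enough free memory."""
    free_mb = node_memory_mb
    running = 0
    for size_mb in task_sizes_mb:
        if size_mb <= free_mb:
            free_mb -= size_mb
            running += 1
    return running


# Map-only phase: 10 map tasks pending, no reduce tasks yet, each task needs 2,048 MB.
print(running_with_slots(4, 2, pending_maps=10, pending_reduces=0))  # 4 (reduce slots sit idle)
print(running_with_containers(12_288, [2_048] * 10))                  # 6
```

Both configurations have room for six 2,048 MB tasks in total. The slot-based node can use only four of them during a map-only phase, whereas the container-based node can use all six.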

YARN cluster: The YARN cluster comes into play whenever a client submits an application. The Resource Manager allocates a container for the application's Application Master and launches it, which marks the application as submitted. Using a resource-request protocol, the Application Master then negotiates resource containers for the application on each node. While the application executes, the Application Master monitors its containers until they complete. Once the application has completed, the Application Master deregisters from the Resource Manager and its containers are released, which completes the cycle.
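The application life cycle described above can be sketched as a small state machine. The state names below are a subset of those YARN reports for applications (`YarnApplicationState` also includes others, such as `NEW` and `NEW_SAVING`). The event names and the transition table are hypothetical simplifications for illustration. In this model, an application becomes `RUNNING` once its Application Master registers, and `FINISHED` once the Application Master deregisters.

```python
from enum import Enum
from typing import Dict, FrozenSet, List, Tuple


class AppState(Enum):
    SUBMITTED = "SUBMITTED"
    ACCEPTED = "ACCEPTED"
    RUNNING = "RUNNING"
    FINISHED = "FINISHED"
    FAILED = "FAILED"
    KILLED = "KILLED"


ACTIVE = frozenset({AppState.SUBMITTED, AppState.ACCEPTED, AppState.RUNNING})

# event -> (states in which the event is allowed, resulting state)
TRANSITIONS: Dict[str, Tuple[FrozenSet[AppState], AppState]] = {
    "scheduler_accepted": (frozenset({AppState.SUBMITTED}), AppState.ACCEPTED),
    "am_registered": (frozenset({AppState.ACCEPTED}), AppState.RUNNING),
    "am_unregistered": (frozenset({AppState.RUNNING}), AppState.FINISHED),
    "failed": (ACTIVE, AppState.FAILED),
    "killed": (ACTIVE, AppState.KILLED),
}


def replay(events: List[str]) -> List[AppState]:
    """Apply events to a newly submitted application and return every state it passes through."""
    state = AppState.SUBMITTED
    history = [state]
    for event in events:
        allowed_from, target = TRANSITIONS[event]
        if state not in allowed_from:
            raise ValueError(f"event {event!r} is not allowed in state {state.name}")
        state = target
        history.append(state)
    return history


path = replay(["scheduler_accepted", "am_registered", "am_unregistered"])
print([s.name for s in path])  # ['SUBMITTED', 'ACCEPTED', 'RUNNING', 'FINISHED']
```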

Difference between MapReduce1 and MapReduce2/YARN: The earlier Hadoop architecture was highly constrained by the Job Tracker, which was responsible for both managing resources and scheduling jobs across the cluster. In the YARN architecture, the new Resource Manager manages resource usage across all applications, while the Application Masters take responsibility for managing job execution. This approach makes it possible to scale Hadoop clusters to much larger configurations than before. In addition, YARN lets a range of programming models run in parallel on the same cluster, including graph processing, iterative processing, machine learning and general cluster computing.

With the help of YARN, we can create more complex distributed applications.

Summary: The MapReduce framework is one of the most important parts of big data processing. In the earlier version of MapReduce, the components were designed to address the basic needs of processing and resource management. It has since evolved into a much improved version known as MapReduce2/YARN, which provides improved features and functionality.

Let us summarize our discussion in the following bullets:

- YARN stands for ‘Yet Another Resource Negotiator’.

- YARN was introduced with Hadoop 2.0.

- YARN provides the following features:

  - Scalability

  - Compatibility with existing MapReduce-based applications

  - Better Cluster Utilization

  - Support for additional workloads apart from MapReduce

  - Agility

- YARN splits the major responsibilities of the Job Tracker and Task Tracker into the following separate entities:

  - Global Resource Manager

  - Application Master per application

  - Node Manager per slave node

  - Containers for each application, running on the Node Managers