Overview:

In the current technology landscape, big data and analytics are two of the areas attracting the most interest. The obvious reason for this traction is that enterprises are getting business benefit from big data and BI applications. Hadoop has now become a mainstream technology, so its coverage and discussion are spreading beyond the tech media. However, we have observed that people still find it difficult to understand the actual concepts, and often form only a vague idea of Hadoop and related technologies.

In this article, we try to explain the key Hadoop terms in a very simple way, so that both technical and non-technical audiences can understand them.

Hadoop eco-system: what exactly does it mean?

Hadoop is a very powerful open source platform managed by the Apache Software Foundation. The Hadoop platform is built on Java technologies and is capable of processing huge volumes of heterogeneous data in a distributed, clustered environment. Because it scales out by adding nodes to the cluster, it is well suited to distributed computing.

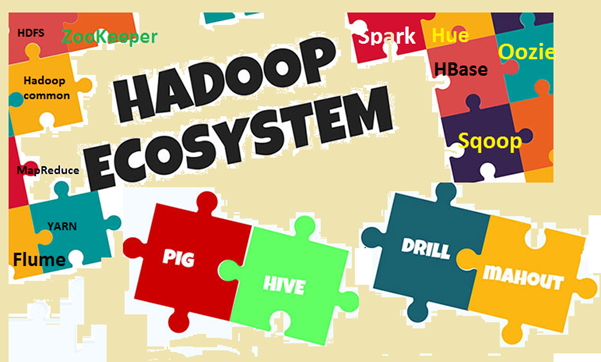

The Hadoop eco-system consists of the Hadoop core components and other associated tools. Among the core components, the Hadoop Distributed File System (HDFS) and the MapReduce programming model are the two most important concepts. Among the associated tools, Hive for SQL, Pig for dataflow and ZooKeeper for managing services are important. We will explain these terms in detail.

Image 1: Hadoop eco-system

Why do you need to know the key terms?

We have already noted that Hadoop is a very popular topic nowadays, and everybody is talking about it, knowingly or unknowingly. The problem is that if you discuss or listen to something without knowing what it actually means, you cannot connect the dots or digest it. This is more visible for people from other domains, such as business, marketing and top management. These people do not need to know 'how Hadoop works'; they are more interested in 'how it can bring business benefit'. To realize that benefit, a basic understanding of Hadoop terms is important at every level. At the same time, the terms should be explained simply, without complex jargon, so that readers are comfortable.

Let’s understand the key terms

In this section we will explore different terms in Hadoop and its eco-system, with a short explanation of each. For clarity, we will use two broad categories: the base modules, and the additional software packages and tools that can be installed separately or on top of Hadoop. In everyday usage, the name Hadoop often refers to all of these together.

First, let us have a look at the terms which come under the base modules.

- Apache Hadoop: Apache Hadoop is an open source framework for processing large volumes of data in a clustered environment. It uses the simple MapReduce programming model for reliable, scalable and distributed computing. Both storage and computation are distributed in this framework.

- Hadoop Common: As the name suggests, it contains the common utilities that support the other Hadoop modules. It is basically a library of common tools and utilities, and is mainly used by developers during application development.

- HDFS: HDFS (Hadoop Distributed File System) is a distributed file system that spans commodity hardware. It scales out easily as nodes are added and provides high throughput. Files are split into data blocks, which are replicated and stored across the nodes of the cluster.

- MapReduce: MapReduce is a programming model for parallel processing of large volumes of data in a distributed environment. A MapReduce program has two main components. One is the Map() method, which filters and transforms the input into intermediate key-value pairs; the framework then sorts and groups these pairs by key. The other is the Reduce() method, which summarizes the Map output for each key.

- Yet Another Resource Negotiator (YARN): YARN is the resource manager introduced in Hadoop 2. Its role is to manage and schedule computing resources in a clustered environment.
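To make the Map and Reduce idea more concrete, here is a minimal sketch of the classic word count example in plain Python. It runs on a single machine and does not use the Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample input are our own assumptions, chosen only for illustration.

```python
from collections import defaultdict


def map_phase(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group intermediate values by key and sort the keys.

    In a real cluster the framework does this between Map and Reduce;
    here it is simulated in memory (an assumption of this sketch).
    """
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reduce_phase(key, values):
    """Reduce step: summarize all values for one key, here by adding them."""
    return key, sum(values)


def word_count(lines):
    """Run the map, shuffle and reduce steps over a list of text lines."""
    intermediate = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    # Hypothetical sample input, standing in for lines read from HDFS.
    sample = ["Hadoop stores data", "Hadoop processes data"]
    print(word_count(sample))
```

In a real Hadoop job, many Map tasks would run in parallel on different blocks of the input, and the grouping step would move data between nodes. The sketch only shows the logical flow of the model.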

Now, let us look at the other related terms in the Hadoop eco-system.

- HBase: HBase is an open source, scalable, distributed, non-relational database. It is written in Java and modeled on Google's Bigtable. It typically uses HDFS as its underlying storage.

- Hive: Hive is data warehouse software that supports reading, writing and managing large volumes of data stored in a distributed storage system. It provides an SQL-like query language, known as HiveQL (HQL), for querying the data. Hive supports storage in HDFS and in other compatible file systems such as Amazon S3.

- Apache Pig: Pig is a high-level platform for analyzing large data sets. The language used to write Pig scripts is known as Pig Latin. It abstracts the underlying MapReduce programs, making it easier for developers to work with the MapReduce model without writing MapReduce code directly.

- Apache Spark: Spark is an open source cluster computing framework and general-purpose compute engine for large-scale data, including data stored in Hadoop. The Spark project has reported speeds of up to 100 times faster than MapReduce when data is processed in memory, and up to 10 times faster on disk, although actual gains depend on the workload. Spark can run in different modes and environments, such as stand-alone mode, on Hadoop or on Amazon EC2. It can access data from HDFS, HBase, Hive and other Hadoop data sources.

- Sqoop: Sqoop is a command-line tool for transferring data between relational databases (RDBMS) and Hadoop. It is mainly used to import data from relational databases into Hadoop storage such as HDFS, Hive or HBase, and to export it back. The name 'Sqoop' combines the first part of 'SQL' with the last part of 'Hadoop'.

- Oozie: Oozie is a workflow engine for Hadoop. It schedules workflows to manage Hadoop jobs.

- ZooKeeper: Apache ZooKeeper is an open source platform that provides a high-performance coordination service for distributed applications, including those in the Hadoop eco-system. It is a centralized service for maintaining configuration information, naming, distributed synchronization and group services.

- Flume: Apache Flume is a distributed service mainly used for collecting, aggregating and moving data. It works efficiently with large amounts of log and event data.

- Hue: Hue is a web interface for analyzing Hadoop data. It is an open source project that supports Hadoop and its eco-system, and its main purpose is to provide a better user experience. It offers drag-and-drop facilities and editors for Spark, Hive, HBase and other tools.

- Mahout: Mahout is open source software for quickly building scalable machine learning and data mining applications.

- Ambari: Ambari is a web-based tool for monitoring and managing Hadoop clusters. It includes support for eco-system services and tools such as HDFS, MapReduce, HBase, ZooKeeper, Pig and Sqoop. Its three main functions are provisioning, managing and monitoring Hadoop clusters.

As the Hadoop eco-system is continuously evolving, new software, services and tools keep emerging. As a result, new terms and jargon will appear in the big data world. We need to keep a close watch and understand them in time.

Conclusion

In this article we have tried to identify the most important key terms in the Hadoop eco-system. We have also briefly discussed the eco-system itself and why we need to know the terms. Hadoop has now become a mainstream technology, so more people are getting involved with it, and this is the right time to understand the basic concepts and terms used in the Hadoop world. In future, many new concepts and terms will appear, and we must update ourselves accordingly.